Correlation coefficient

Definition

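Given two random variables X and Y, Pearson’s correlation coefficient ρ is defined as the ratio between the covariance of the two variables and the product of their standard deviations:

$$\rho_{X,Y} = \frac{\operatorname{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{E[(X-\mu_X)(Y-\mu_Y)]}{\sigma_X \sigma_Y},$$

where $\mu_X$, $\mu_Y$ and $\sigma_X$, $\sigma_Y$ are the means and standard deviations of X and Y, respectively. The correlation coefficient lies between -1 and 1 and is commonly used as a measure of the strength and direction of the linear relationship between the two variables. Substituting the covariance and standard deviations estimated from a sample of N paired observations $(x_i, y_i)$, for example two time series, gives the sample correlation coefficient, commonly denoted r:

$$r = \frac{\sum_{i=1}^{N}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{N}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{N}(y_i-\bar{y})^2}},$$

where

$$\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \bar{y} = \frac{1}{N}\sum_{i=1}^{N} y_i.$$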
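A minimal sketch of the mean-centred (two-pass) formula above in plain Python; the function name `pearson_r` is hypothetical and chosen only for illustration.

```python
import math


def pearson_r(x, y):
    """Sample correlation coefficient r using the mean-centred (two-pass) formula."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length N >= 2")
    n = len(x)
    # First pass: sample means x_bar and y_bar
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Second pass: cross-product and squared deviations from the means
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    return s_xy / math.sqrt(s_xx * s_yy)


print(pearson_r([1, 2, 3, 4], [1, 3, 2, 4]))  # 0.8
```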

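Alternatively, r can also be written in terms of raw sums (all sums run over i = 1, ..., N):

$$r = \frac{N\sum x_i y_i - \sum x_i \sum y_i}{\sqrt{N\sum x_i^2 - \left(\sum x_i\right)^2}\,\sqrt{N\sum y_i^2 - \left(\sum y_i\right)^2}}.$$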
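The raw-sum form can be sketched the same way; `pearson_r_sums` is a hypothetical name. It needs only one pass over the data, but when the means are large compared with the spread of the values, terms such as N Σx² - (Σx)² are differences of two nearly equal numbers and can lose most of their precision in floating point, so the mean-centred form is usually the safer choice numerically.

```python
import math


def pearson_r_sums(x, y):
    """Sample correlation coefficient r from raw sums, accumulated in a single pass."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length N >= 2")
    n = len(x)
    sx = sy = sxx = syy = sxy = 0.0
    for xi, yi in zip(x, y):
        sx += xi
        sy += yi
        sxx += xi * xi
        syy += yi * yi
        sxy += xi * yi
    num = n * sxy - sx * sy
    den = math.sqrt(n * sxx - sx * sx) * math.sqrt(n * syy - sy * sy)
    return num / den


print(pearson_r_sums([1, 2, 3, 4], [1, 3, 2, 4]))
```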

Testing for significance

To test the significance of the estimated correlation coefficient against the null hypothesis that the true correlation is 0, one can compute the statistic

$$t = r\sqrt{\frac{N-2}{1-r^2}},$$

which, under the null hypothesis (zero correlation) and when the pairs are independent draws from a bivariate normal distribution, follows a Student’s t-distribution with N-2 degrees of freedom. For instance, in Matlab the p-value can be computed with the function tcdf(): p = 2*tcdf(-abs(t), N-2) gives the p-value of a two-tailed t-test for a given t.
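For readers without Matlab, the following stdlib-only Python sketch computes t and its two-tailed p-value. It relies on the identity that, for Student's t with ν degrees of freedom, P(|T| >= |t|) = I_x(ν/2, 1/2) with x = ν/(ν + t²), where I is the regularized incomplete beta function, evaluated here with a standard continued-fraction expansion (modified Lentz's method). All function names are hypothetical; if SciPy is available, `2 * scipy.stats.t.sf(abs(t), N - 2)` should give the same p-value.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the regularized incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) >= tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) >= tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) >= tiny else tiny
        h *= d * c
        # Odd term of the continued fraction
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) >= tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) >= tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_bt = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
              + a * math.log(x) + b * math.log1p(-x))
    bt = math.exp(log_bt)
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def correlation_t_test(r, n):
    """Return (t, two-tailed p) for H0: rho = 0, with n - 2 degrees of freedom."""
    if n < 3:
        raise ValueError("need N >= 3")
    df = n - 2
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r), 0.0
    t = r * math.sqrt(df / (1.0 - r * r))
    # Two-tailed p = P(|T| >= |t|) = I_{df/(df+t^2)}(df/2, 1/2)
    p = _betainc(df / 2.0, 0.5, df / (df + t * t))
    return t, p


t, p = correlation_t_test(0.5, 30)
print(f"t = {t:.3f}, p = {p:.4f}")
```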

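Alternatively, one can convert the correlation coefficient using the Fisher transformation, given by

$$F(r) = \frac{1}{2}\ln\frac{1+r}{1-r} = \operatorname{artanh}(r).$$

F(r) approximately follows a normal distribution with mean

$$F(\rho) = \operatorname{artanh}(\rho)$$

and standard deviation

$$\mathrm{SE} = \frac{1}{\sqrt{N-3}}.$$

With this, a z-score can be defined as

$$z = \frac{F(r) - F(\rho_0)}{\mathrm{SE}} = \bigl(F(r) - F(\rho_0)\bigr)\sqrt{N-3}.$$

Under the null hypothesis that ρ = ρ0, and assuming that the sample pairs are independent and identically distributed draws from a bivariate normal distribution, z approximately follows a standard normal distribution. Thus an approximate p-value can be obtained from a normal probability table.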
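A minimal sketch of this Fisher z-test in Python, using only the standard library; `fisher_z_test` and its parameter `rho0` are hypothetical names. The two-sided p-value replaces the normal probability table with the complementary error function, since P(|Z| >= |z|) = erfc(|z|/√2) for a standard normal Z.

```python
import math


def fisher_z_test(r, n, rho0=0.0):
    """Approximate z-test of H0: rho = rho0 via the Fisher transformation.

    Returns (z, two-sided p-value). Requires |r| < 1, |rho0| < 1 and n > 3.
    """
    if not (abs(r) < 1.0 and abs(rho0) < 1.0) or n <= 3:
        raise ValueError("need |r| < 1, |rho0| < 1 and n > 3")
    # F(r) = artanh(r) is approximately normal with mean artanh(rho) and SD 1/sqrt(n - 3)
    z = (math.atanh(r) - math.atanh(rho0)) * math.sqrt(n - 3)
    # Two-sided p-value from the standard normal distribution
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


z, p = fisher_z_test(0.5, 28)
print(f"z = {z:.3f}, p = {p:.4f}")
```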

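One can also use the Fisher transformation to test whether two correlations r1 and r2, computed from two independent samples, are significantly different by computing the z-score

$$z = \frac{\operatorname{artanh}(r_1) - \operatorname{artanh}(r_2)}{\sqrt{\dfrac{1}{N_1-3} + \dfrac{1}{N_2-3}}},$$

which is distributed approximately as N(0,1) when the null hypothesis (equal true correlations) is true. Here $N_1$ and $N_2$ are the numbers of samples used to compute $r_1$ and $r_2$, respectively.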
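The two-sample comparison can be sketched in the same way; `compare_correlations` is a hypothetical name, and the code assumes, as the formula does, that r1 and r2 come from independent samples.

```python
import math


def compare_correlations(r1, n1, r2, n2):
    """Approximate z-test of H0: rho1 = rho2 for correlations from independent samples.

    Returns (z, two-sided p-value). Requires |r1|, |r2| < 1 and n1, n2 > 3.
    """
    if not (abs(r1) < 1.0 and abs(r2) < 1.0) or n1 <= 3 or n2 <= 3:
        raise ValueError("need |r1|, |r2| < 1 and n1, n2 > 3")
    # Standard error of the difference of the two Fisher-transformed values
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (math.atanh(r1) - math.atanh(r2)) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


z, p = compare_correlations(0.5, 103, 0.1, 103)
print(f"z = {z:.3f}, p = {p:.5f}")
```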

Incremental algorithm to compute the correlation coefficient

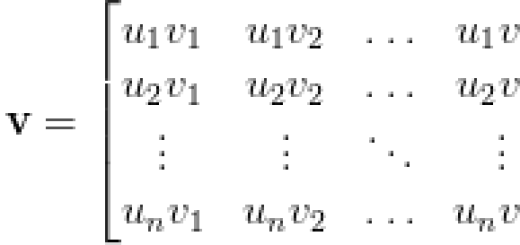

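Define the running means and the running sums of centred products over the first n samples as follows:

$$\bar{x}_n = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \bar{y}_n = \frac{1}{n}\sum_{i=1}^{n} y_i$$

and

$$S_{xx,n} = \sum_{i=1}^{n}(x_i-\bar{x}_n)^2, \qquad S_{yy,n} = \sum_{i=1}^{n}(y_i-\bar{y}_n)^2, \qquad S_{xy,n} = \sum_{i=1}^{n}(x_i-\bar{x}_n)(y_i-\bar{y}_n).$$

The correlation coefficient of the first n samples can now be written as

$$r_n = \frac{S_{xy,n}}{\sqrt{S_{xx,n}\,S_{yy,n}}}.$$

To estimate the correlation coefficient incrementally, use the following algorithm:

Initialize with n = 1:

$$\bar{x}_1 = x_1, \qquad \bar{y}_1 = y_1, \qquad S_{xx,1} = S_{yy,1} = S_{xy,1} = 0$$

for n = 2 to N, compute

$$\bar{x}_n = \bar{x}_{n-1} + \frac{x_n - \bar{x}_{n-1}}{n}, \qquad \bar{y}_n = \bar{y}_{n-1} + \frac{y_n - \bar{y}_{n-1}}{n}$$

$$S_{xx,n} = S_{xx,n-1} + (x_n - \bar{x}_{n-1})(x_n - \bar{x}_n)$$

$$S_{yy,n} = S_{yy,n-1} + (y_n - \bar{y}_{n-1})(y_n - \bar{y}_n)$$

$$S_{xy,n} = S_{xy,n-1} + (x_n - \bar{x}_{n-1})(y_n - \bar{y}_n)$$

Re-compute r_n using the equation above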

end
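A minimal Python sketch of a streaming correlation estimator, assuming Welford-style updates of the running means and centred sums ($\bar{x}_n$, $\bar{y}_n$, $S_{xx,n}$, $S_{yy,n}$, $S_{xy,n}$); the class name `RunningCorrelation` and its methods are hypothetical. Starting from all-zero state, the first call to `update` reproduces the n = 1 initialization ($\bar{x}_1 = x_1$, $\bar{y}_1 = y_1$, sums equal to 0), and every later call applies the n = 2 to N updates.

```python
import math


class RunningCorrelation:
    """Pearson r updated one sample pair at a time (Welford-style running sums)."""

    def __init__(self):
        self.n = 0
        self.x_bar = 0.0
        self.y_bar = 0.0
        self.s_xx = 0.0
        self.s_yy = 0.0
        self.s_xy = 0.0

    def update(self, x, y):
        self.n += 1
        # Deviations from the previous means x_bar_{n-1}, y_bar_{n-1}
        dx = x - self.x_bar
        dy = y - self.y_bar
        # Update the running means to x_bar_n, y_bar_n
        self.x_bar += dx / self.n
        self.y_bar += dy / self.n
        # Update the centred sums using old and new means
        self.s_xx += dx * (x - self.x_bar)
        self.s_yy += dy * (y - self.y_bar)
        self.s_xy += dx * (y - self.y_bar)

    @property
    def r(self):
        """Current estimate r_n; NaN while either variance is still zero."""
        denom = math.sqrt(self.s_xx * self.s_yy)
        return self.s_xy / denom if denom > 0 else float("nan")


rc = RunningCorrelation()
for xi, yi in zip([1, 2, 3, 4], [1, 3, 2, 4]):
    rc.update(xi, yi)
    print(rc.n, rc.r)
```

Because the updates work with deviations from the running means rather than with raw sums of squares, adding a large constant offset to the data leaves the estimate essentially unchanged.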